This article describes how to set up an Azure AI Search index so that Metric Insights Concierge can search documents that a customer has indexed with Azure Services.

NOTE: The only models fully supported by the Metric Insights Concierge External Resource feature for utilizing Azure AI Search are GPT-4o and GPT-4-Turbo deployed in Azure. It will not work for non-Azure models or non-OpenAI models deployed to Azure. This feature also has not been tested with any other OpenAI models available on Azure.

Prerequisites

1. Prepare the Documents

All documents to be indexed must first be downloaded locally. You will then upload every document that you want Concierge to search into the Azure Index.

Recommended best practices:

- Convert your PDF or DOCX documents to plain text or markdown. Review the conversion results before handing the document to Azure for indexing to avoid issues (e.g., data loss, unwanted artifacts, or the absence of support for tables).

- Documents should be no longer than 65,000 characters. Azure truncates anything beyond that limit.

- Shorter documents are preferred, especially if each document represents a self-contained unit of knowledge such as a single question and answer.

- Each document should include a clear title or caption indicating its contents.

- If a document is divided into smaller parts, use the original title along with a specific title for each part.
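The size and titling recommendations above can be applied with a short script before upload. The following is a minimal sketch, not part of Metric Insights or Azure: the function name `split_document` and the "Part N" heading format are assumptions made for illustration, and only the 65,000-character limit comes from this article.

```python
MAX_CHARS = 65_000  # limit quoted in this article


def split_document(title, text, max_chars=MAX_CHARS):
    """Return a list of titled parts, each at most max_chars characters long.

    Hypothetical helper: splits on blank lines (paragraphs) where possible and
    prefixes every part with the original title plus a part-specific title.
    """
    if len(title) + 2 + len(text) <= max_chars:
        return [f"{title}\n\n{text}"]
    # Reserve room for the heading "<title> - Part N" and the blank line after it.
    budget = max_chars - len(title) - 20
    if budget <= 0:
        raise ValueError("title is too long for the size limit")
    bodies, current = [], ""
    for paragraph in text.split("\n\n"):
        # Hard-split a single paragraph that exceeds the budget on its own.
        pieces = [paragraph[i:i + budget]
                  for i in range(0, len(paragraph), budget)] or [""]
        for piece in pieces:
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= budget:
                current = candidate
            else:
                bodies.append(current)
                current = piece
    if current:
        bodies.append(current)
    return [f"{title} - Part {n}\n\n{body}" for n, body in enumerate(bodies, 1)]
```

Each returned string can be saved as its own plain-text file. Splitting on paragraph boundaries keeps self-contained units together where possible, which fits the preference for shorter documents.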

2. Create an Azure OpenAI Resource

If you have not already done so, create an Azure OpenAI Resource. For detailed instructions, see this article.

1. Set Up an Azure Index

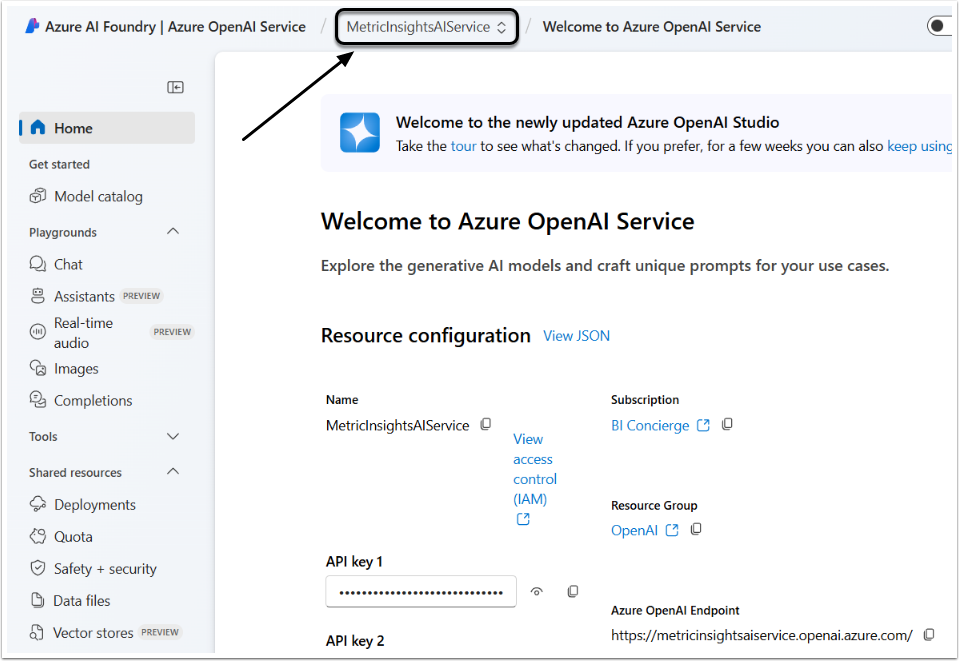

1.1. Open Azure OpenAI Service Studio

Access Azure OpenAI Service

In the Resource drop-down menu, choose the Azure OpenAI Resource created previously.

1.2. Create Deployment for the Latest GPT Model

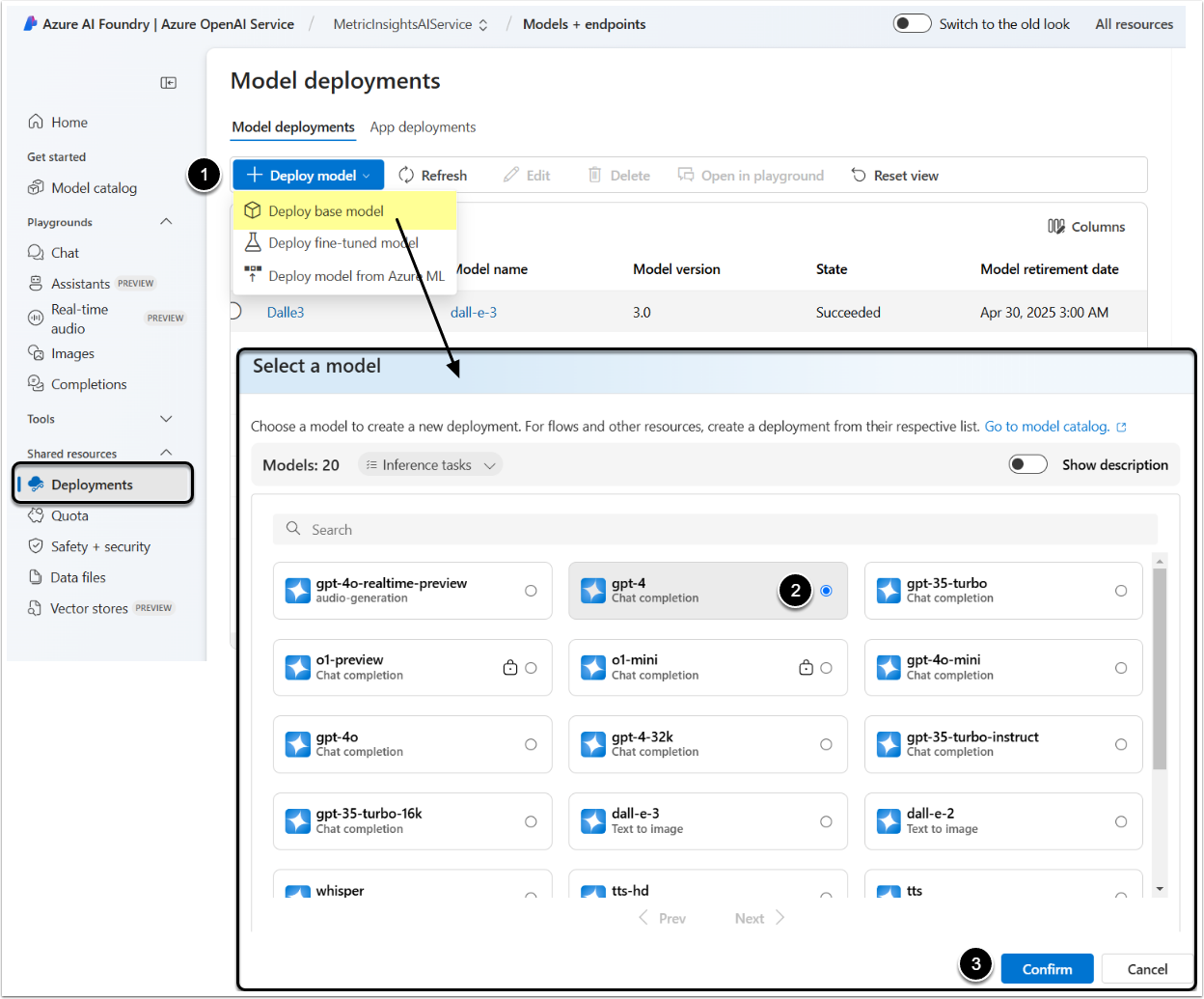

Access Shared resources > Deployments

- [+Deploy model] and select "Deploy base model" from the drop-down menu to open the selection window

- Select gpt-4o or gpt-4-turbo model

- [Confirm]

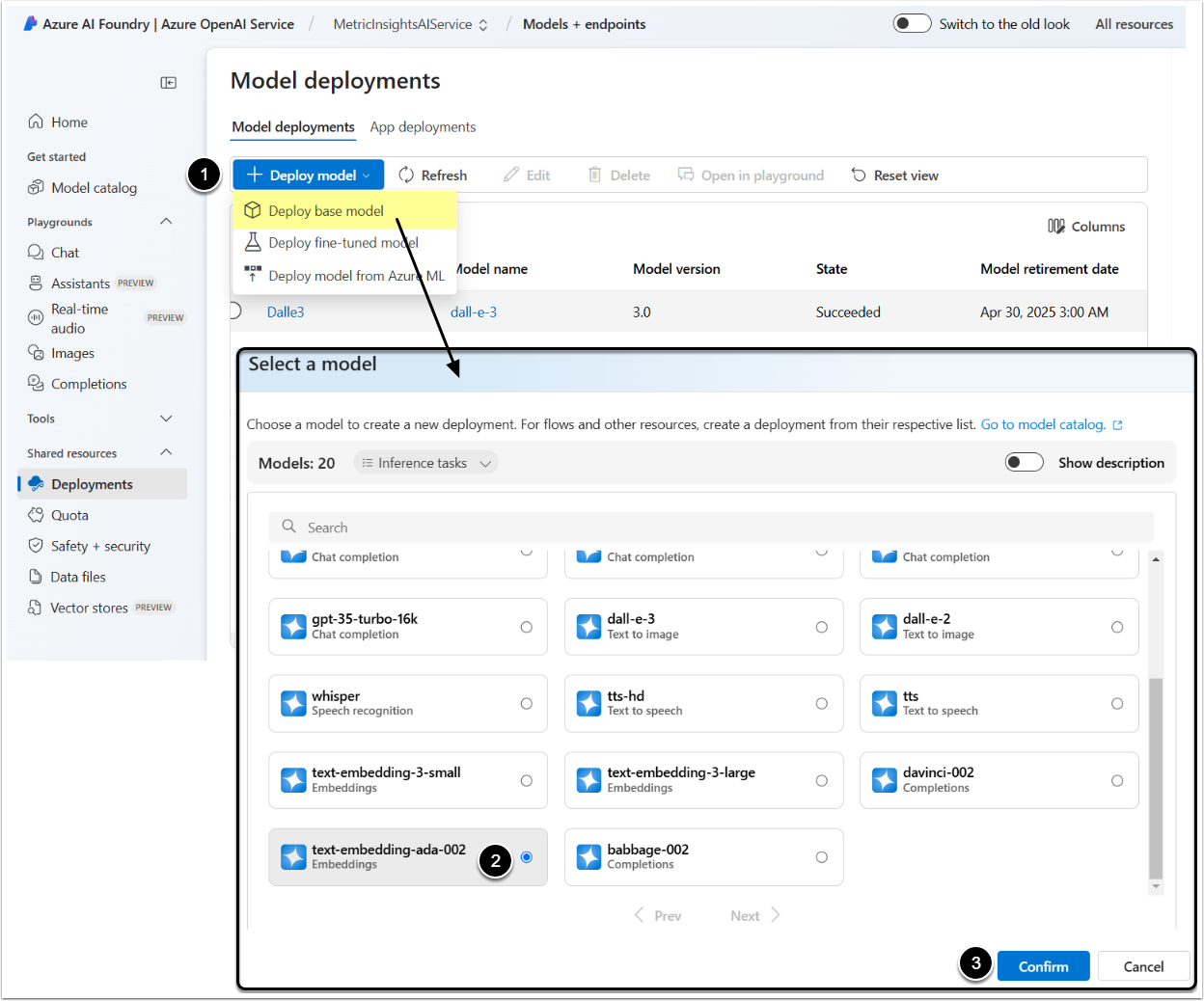

1.3. Create Deployment for an Embedding Model

- [+Deploy model] and select "Deploy base model" from the drop-down menu to open the selection window

- Select an embedding model

- [Confirm]

NOTE: Copy the name of the embedding model; it will be needed later to set the Concierge External Resource.

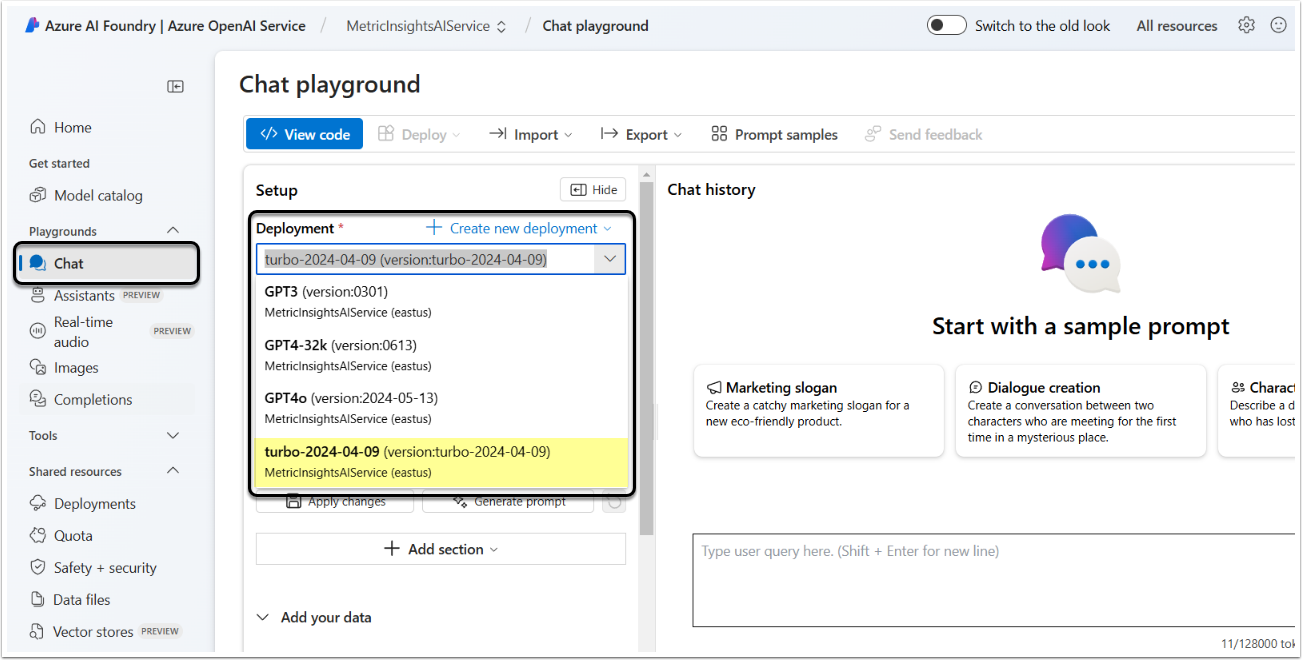

1.4. Select Deployment in Chat

Access Playgrounds > Chat

Click the Deployment field and select the GPT-4 model that was deployed before.

1.5. Add a Data Source

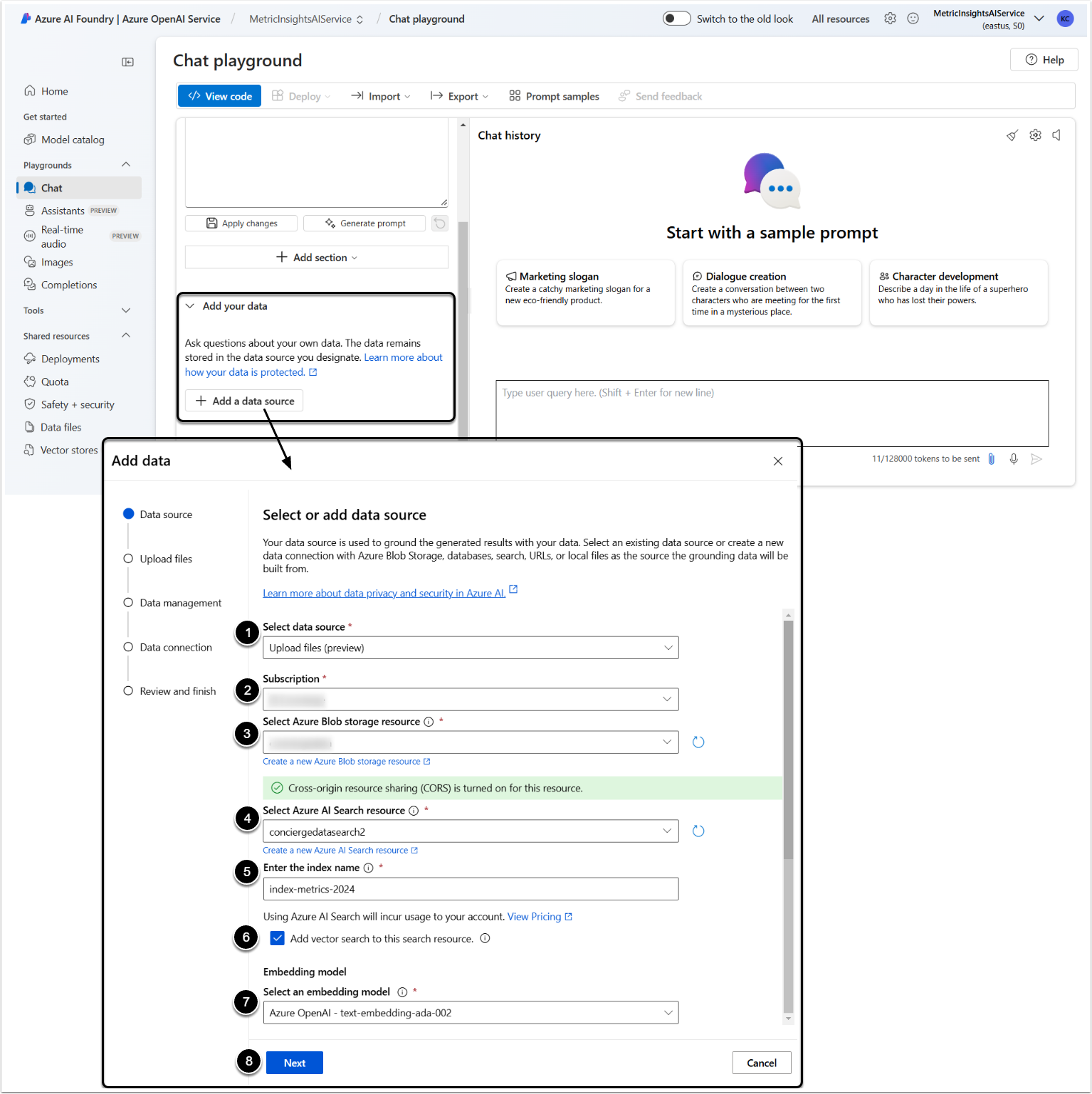

1.5.1. Fill in the Basics

Scroll to the Setup section, expand Add your data, and click [+Add data source]

- Select data source: Choose the "Upload files (preview)" option

- Subscription: Select the subscription

- Select Azure Blob storage resource: Select the data storage resource. If it wasn't done previously, the storage resource can be created using the link under the field. You can find detailed instructions about it in this article

- Select Azure AI Search resource: Select an Azure AI Search Service. If it wasn't done previously, the search resource can be created using the link under the field. You can find detailed instructions about it in this article

- NOTE: An Azure AI Search resource is a paid resource. Creating one will add monthly fees to your current subscription

- NOTE: When creating a new Search resource, make sure its Resource Group and Location match those of the chosen GPT-4 model

- Enter the index name: Type the name that will be used to reference this data source

- Make a note of this index name, as you will need it for configuring your Metric Insights External Resource

- Select the Add vector search to this search resource checkbox

- Embedding model: Select the embedding model deployed previously

- [Next]

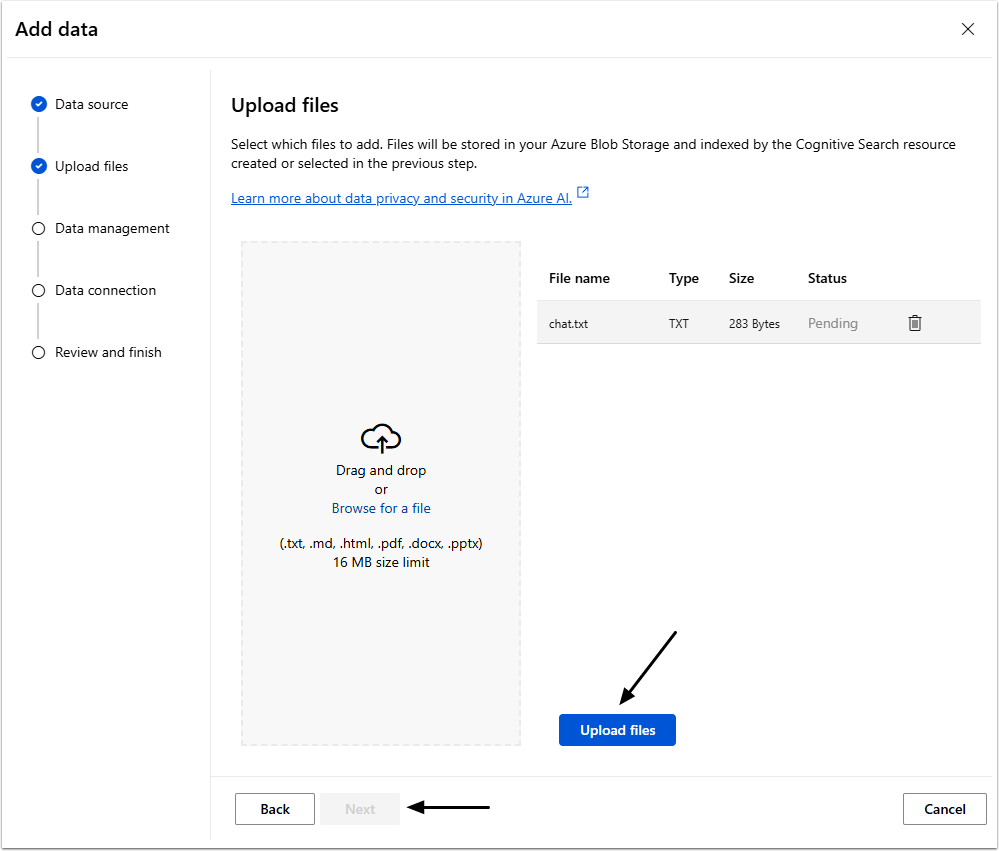

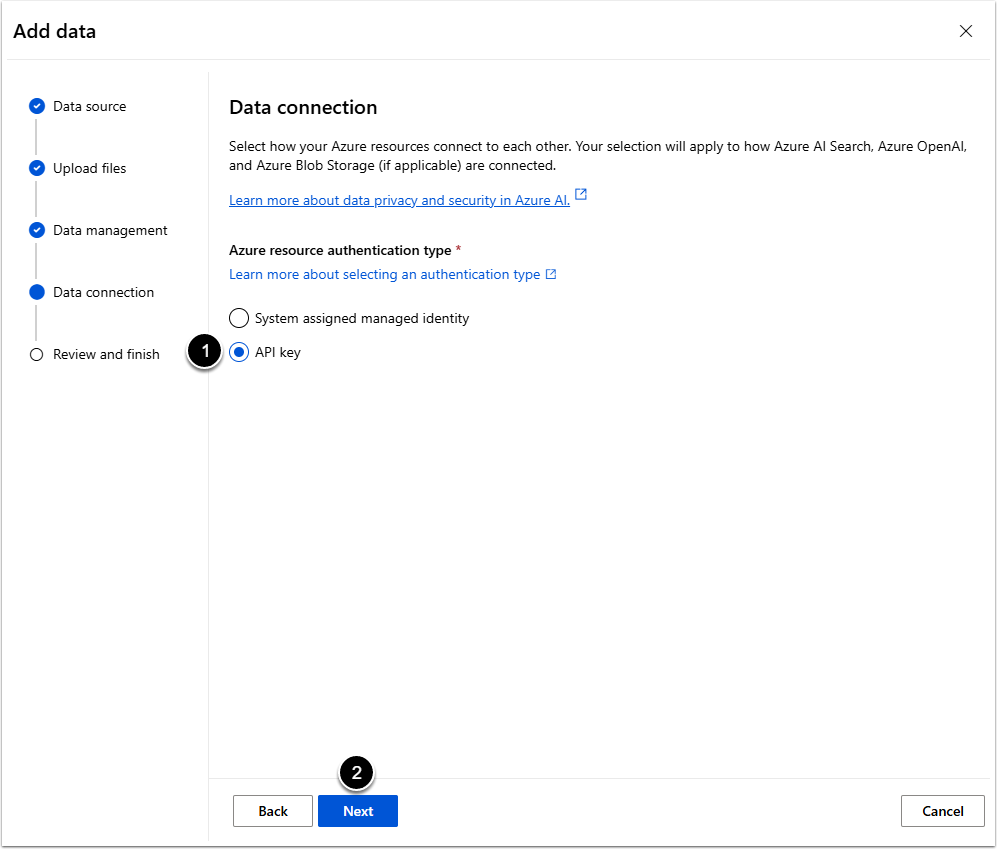

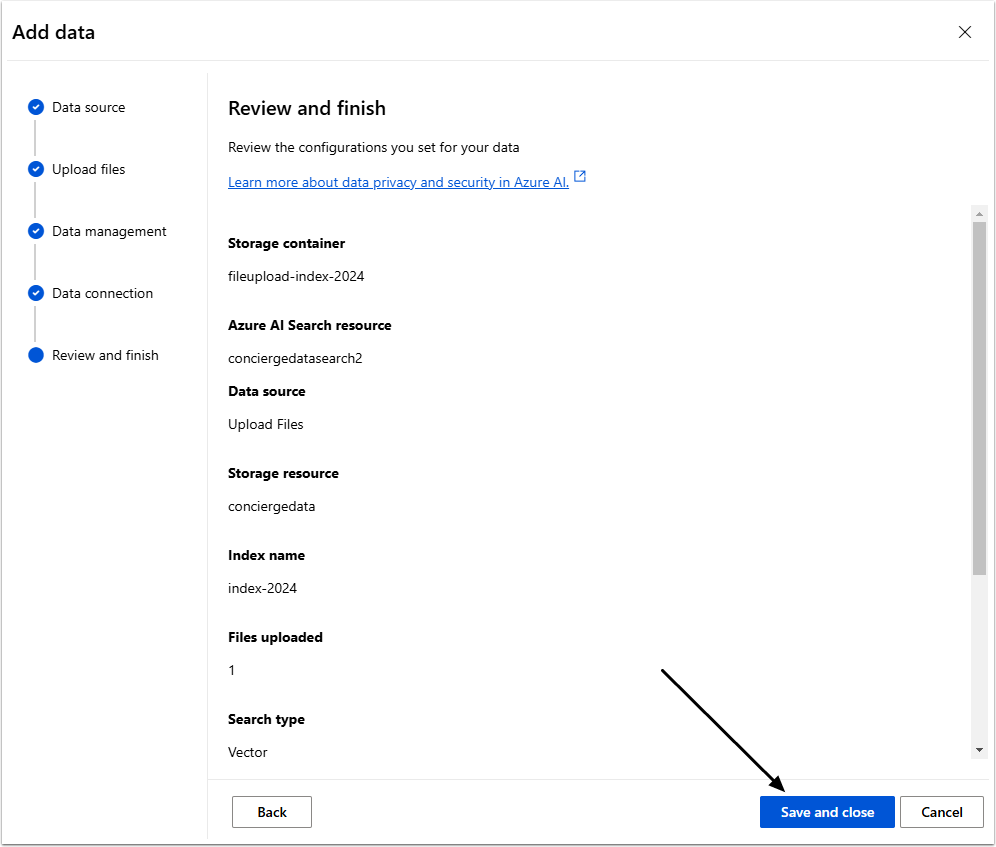

1.5.2. Upload Files

Drag and drop all the documents into the field, click [Upload files] and then [Next].

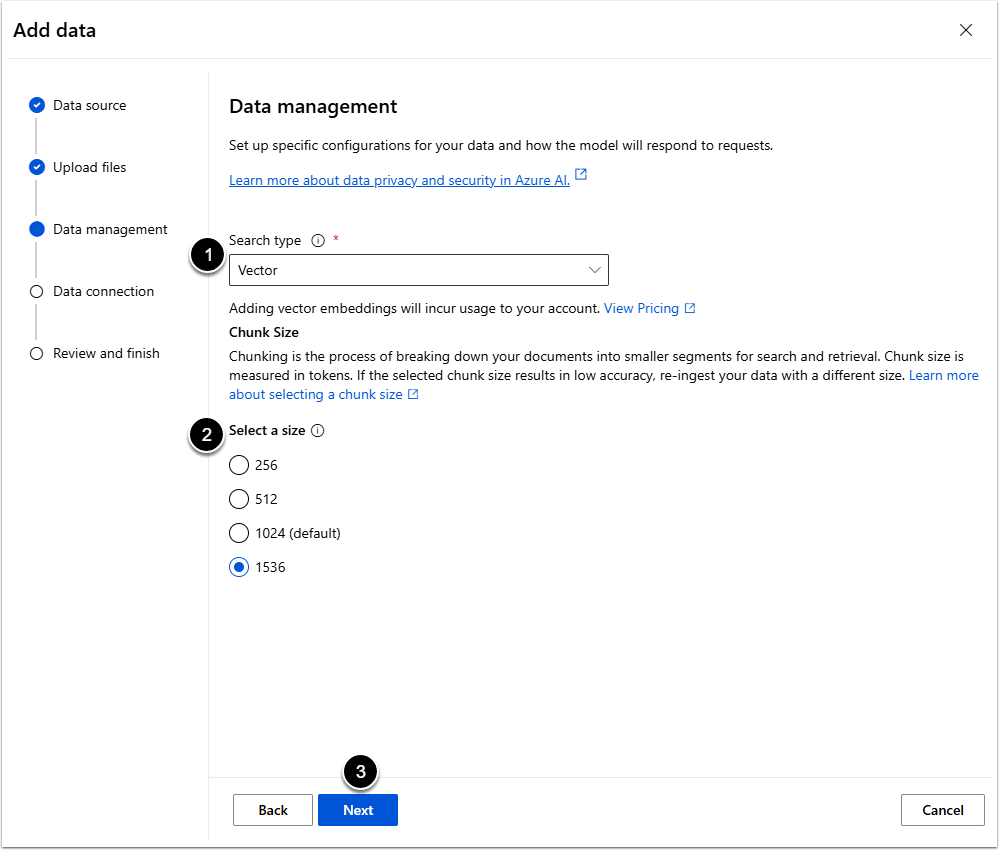

1.5.3. Deal with Data Management

- Search type: Select the "Vector" option

- Select a size: Choose the "1024" or "1536" option. Selecting a smaller size is not recommended

- [Next]

2. Configure Concierge External Resource

NOTE: Only one External Resource Configuration is supported by Concierge at this time. It is recommended to place all the documents to be searched through Azure in the same index.

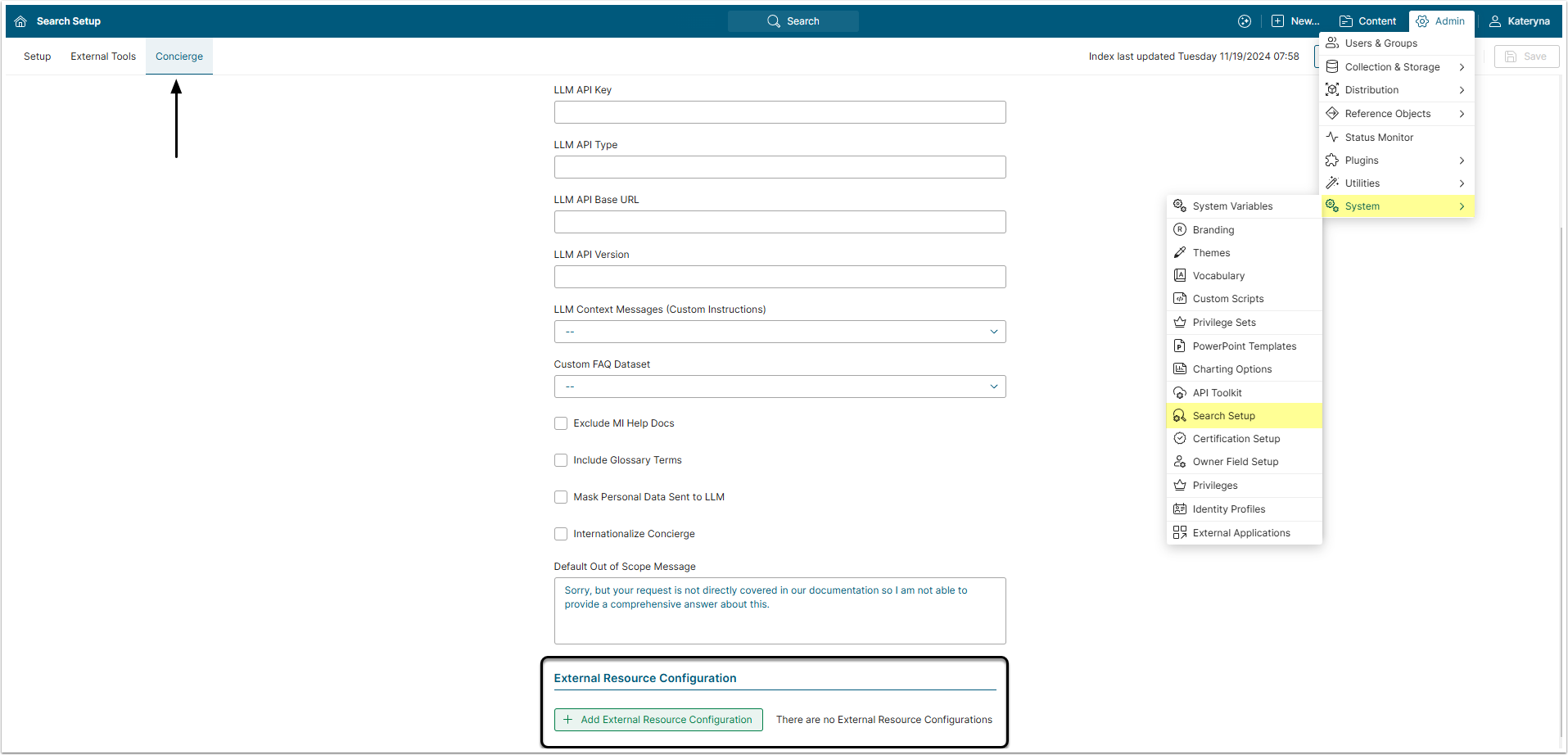

2.1. Open Concierge Settings Page

Access Admin > System > Search Setup and go to the Concierge tab

NOTE: In version 7.1.0, the path has changed to Admin > System > Concierge Setup > Content Sources tab

The External Resource Configuration section is at the bottom of the page.

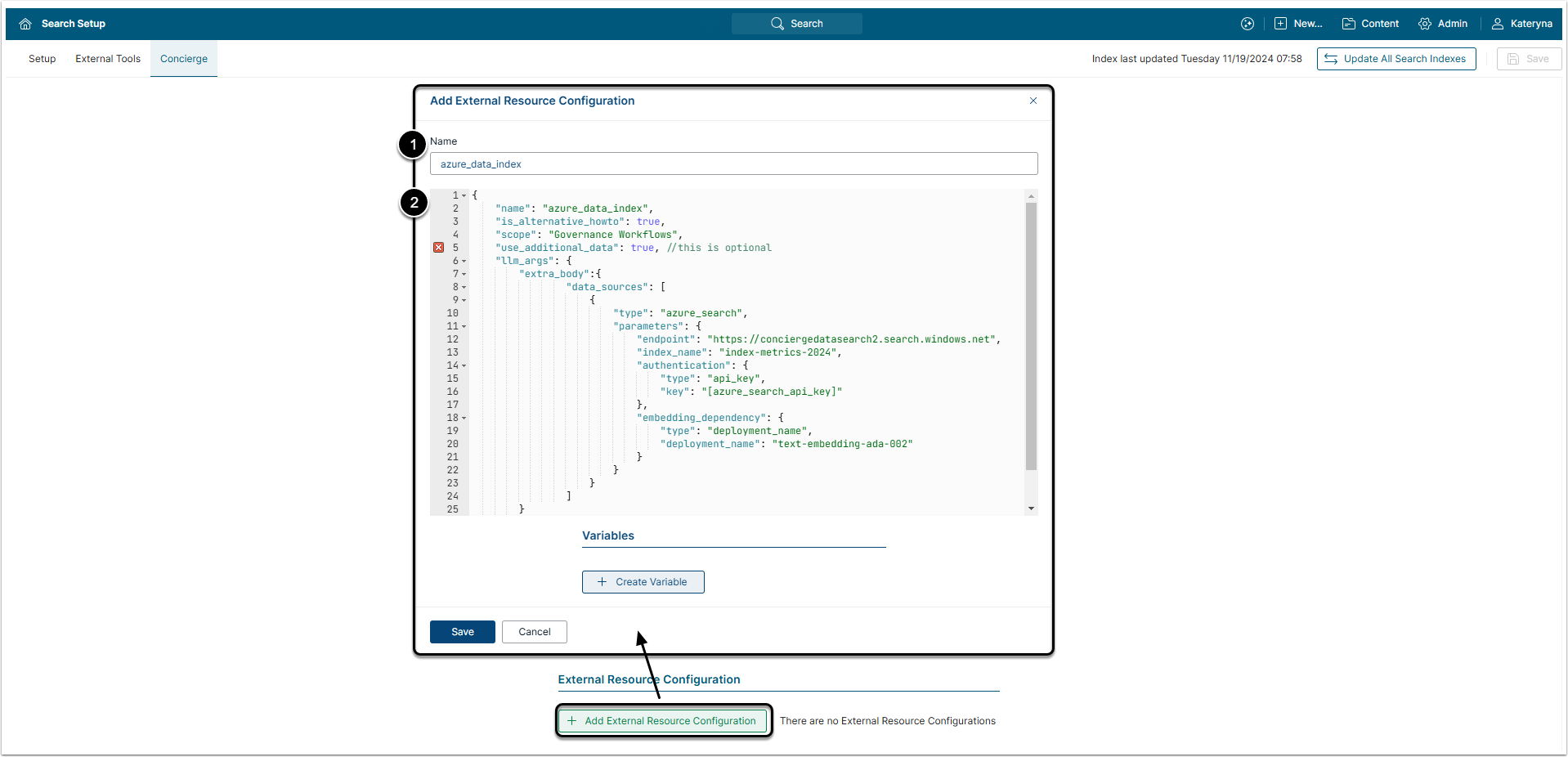

2.2. Add New External Resource Configuration

Click [+Add External Resource Configuration] to open the pop-up window.

- Name: Give the Configuration a descriptive name.

- Enter the Configuration code. You can use the example below, but be sure to replace the placeholder values with your own.

    {
      "name": "answer_with_azure_data",
      "scope": "",
      "llm_args": {
        "extra_body": {
          "data_sources": [
            {
              "type": "azure_search",
              "parameters": {
                "endpoint": "[endpoint url]",
                "index_name": "[name of index in azure]",
                "authentication": {
                  "type": "api_key",
                  "key": "[azure_search_api_key]"
                },
                "embedding_dependency": {
                  "type": "deployment_name",
                  "deployment_name": "[name of deployment]"
                }
              }
            }
          ]
        }
      }
    }

Modify the following:

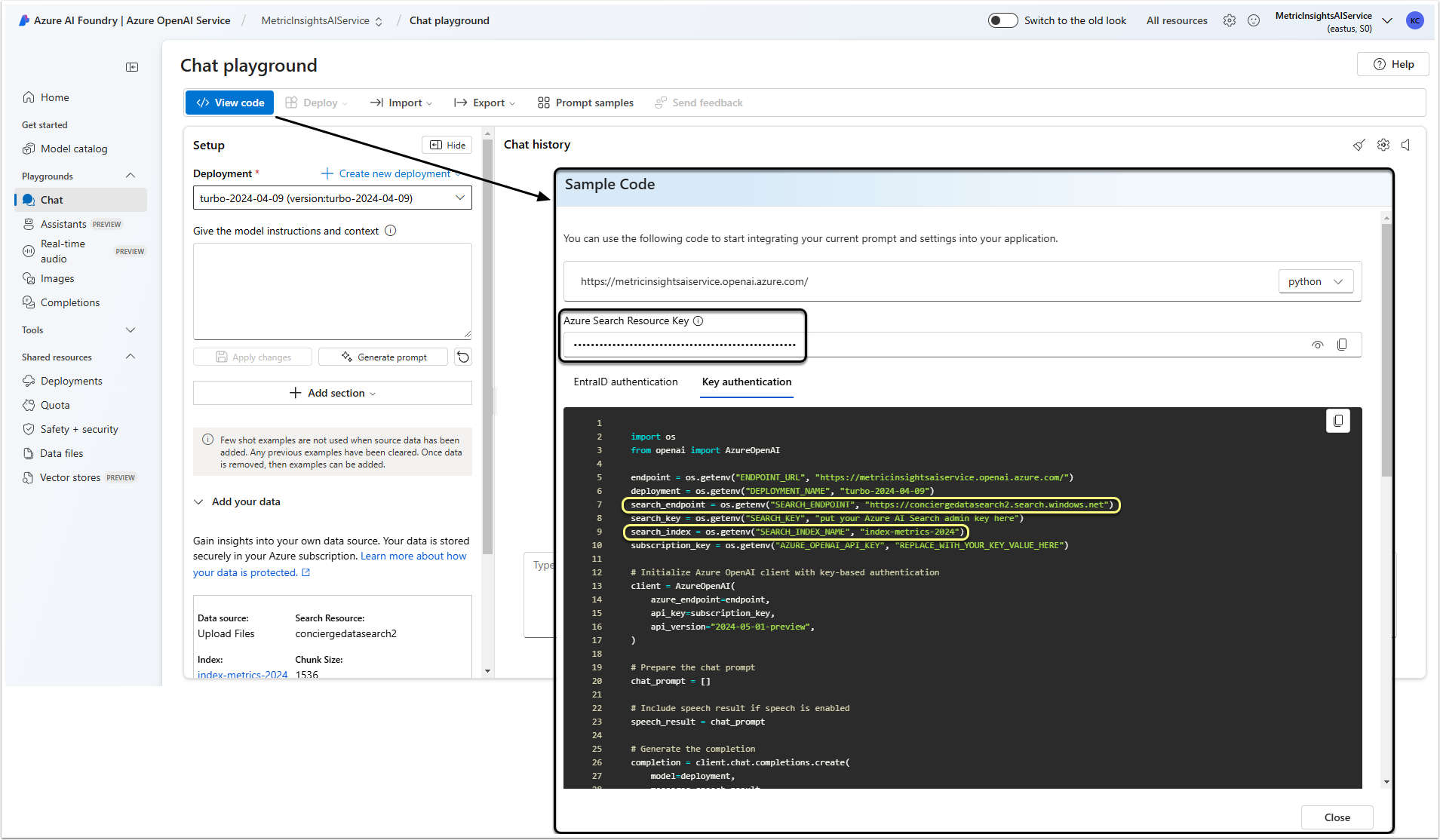

- name: Enter the name that will identify this resource in the Metric Insights error logs. It does not have to match the name provided in the Name field of the Add External Resource Configuration window.

- scope: This scope extends the “Scope of FAQ” that is defined on the Concierge Setup page. It helps the LLM identify the scenarios in which this resource should be used to answer user questions.

- endpoint: Insert search_endpoint copied from the Sample Code window in Step 1.6.

- index_name: Insert search_index copied from the Sample Code window in Step 1.6.

- key: Insert the Azure Search Resource Key copied in Step 1.6. It can be inserted as is or as a variable (see below).

- deployment_name: Enter the embedding model deployment name copied in Step 1.3.
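To reduce the chance of typos when editing the template by hand, the Configuration code could also be generated. The sketch below is illustrative only: `build_config` is a hypothetical helper, and the endpoint, index name, and deployment name in the usage lines are made-up values. The default for `api_key` is the placeholder from the template above, on the assumption that it refers to the variable created in Step 2.3.

```python
import json


def build_config(name, scope, endpoint, index_name, embedding_deployment,
                 api_key="[azure_search_api_key]"):
    """Return the External Resource Configuration as a dict.

    Hypothetical helper; the structure mirrors the example template in this
    article, and api_key defaults to the placeholder used in that template.
    """
    return {
        "name": name,
        "scope": scope,
        "llm_args": {
            "extra_body": {
                "data_sources": [
                    {
                        "type": "azure_search",
                        "parameters": {
                            "endpoint": endpoint,
                            "index_name": index_name,
                            "authentication": {"type": "api_key", "key": api_key},
                            "embedding_dependency": {
                                "type": "deployment_name",
                                "deployment_name": embedding_deployment,
                            },
                        },
                    }
                ]
            }
        },
    }


config = build_config(
    name="answer_with_azure_data",
    scope="",
    endpoint="https://example.search.windows.net",   # made-up endpoint
    index_name="concierge-docs",                     # made-up index name
    embedding_deployment="my-embedding-deployment",  # made-up deployment name
)
print(json.dumps(config, indent=2))
```

The printed JSON can be pasted into the Configuration code field. Serializing with `json.dumps` guarantees balanced braces and quotes, which are the most common mistakes when editing the template manually.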

2.3. Set the API Key Variable

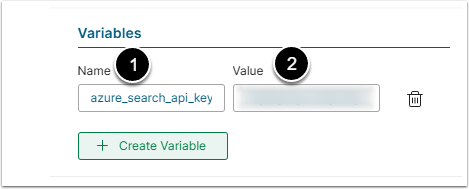

In the Variables section of the Add External Resource Configuration window click [+Create Variable].

- Name: Name the Variable "azure_search_api_key".

- Value: Enter the Azure Search Resource Key copied earlier.

[Save] the Configuration and click [Update All Search Indexes] to complete the setup. Concierge will now answer questions about the content of the indexed data.
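If Concierge does not use the new resource, a common cause is a syntax error or an unreplaced placeholder in the Configuration code. The following sketch checks the pasted text offline. `check_config` is a hypothetical helper, not a Metric Insights tool, and the placeholder stems it looks for are taken from the example template in Step 2.2. The key placeholder is deliberately not flagged, since it presumably stays in place when the variable is used.

```python
import json

# Stems of the placeholders in the example template (assumption: unchanged names).
PLACEHOLDER_STEMS = ("[endpoint url", "[name of index in azure", "[name of deployment")


def check_config(text):
    """Return a list of problems found in the configuration text (empty if none)."""
    try:
        config = json.loads(text)
        params = config["llm_args"]["extra_body"]["data_sources"][0]["parameters"]
        fields = {
            "endpoint": params["endpoint"],
            "index_name": params["index_name"],
            "deployment_name": params["embedding_dependency"]["deployment_name"],
        }
    except json.JSONDecodeError as exc:
        return [f"invalid JSON at line {exc.lineno}: {exc.msg}"]
    except (KeyError, IndexError, TypeError) as exc:
        return [f"missing or malformed field: {exc}"]
    problems = []
    for field, value in fields.items():
        if not isinstance(value, str) or not value.strip():
            problems.append(f"{field} is empty")
        elif any(stem in value for stem in PLACEHOLDER_STEMS):
            problems.append(f"{field} still contains a template placeholder")
    return problems
```

An empty list means the text parses as JSON and the three fields that must be customized have been filled in. It does not confirm that the endpoint, index, or key are valid in Azure.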