Beginning in v7.1.2, Metric Insights provides an API proxy that allows client applications to communicate with the Concierge-configured LLM using an OpenAI-compatible API. The client application interacts with the proxy using the same syntax, request format, and client libraries as OpenAI's Chat Completions API. The proxy then translates these requests into the appropriate API calls for the LLM specified in Concierge (OpenAI, Google AI, Anthropic, etc.).

This proxy can be used as a building block for creating LLM-agnostic solutions, since it provides a unified way of interacting with different language models regardless of the provider.

Key benefits:

- A consistent, OpenAI-compatible interface that works across multiple LLM providers.

- Integration of Concierge-managed LLMs into external applications and custom components without provider-specific code.

- Improved security by using Metric Insights API tokens instead of exposing provider API keys.

Unlike standard Concierge interactions, requests sent through this proxy bypass Concierge workflows and context. You query the model directly, and the request format stays the same whichever provider is configured.
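Because proxy requests bypass Concierge context, any background the model needs has to travel in the request itself. The sketch below shows one way to do that with the standard Chat Completions message list. It assumes the proxy passes `system` messages through unchanged, which this article does not confirm; `buildMessages` is a hypothetical helper name.

```javascript
// Sketch: build a Chat Completions message list that carries its own context.
// ASSUMPTION: the proxy forwards "system" messages the way OpenAI's API does.
function buildMessages(prompt, context) {
  const messages = [];
  if (context) {
    // No Concierge context is applied, so the caller supplies it explicitly.
    messages.push({ role: "system", content: context });
  }
  messages.push({ role: "user", content: prompt });
  return messages;
}

const messages = buildMessages(
  "Summarize last week's sales trend.",
  "You are answering questions for a retail analytics team."
);
console.log(JSON.stringify(messages, null, 2));
```

The resulting array can be used as the `messages` field of the request body.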

Endpoint:

- POST /ai/oai_api/v1/chat/completions: Sends a request to the LLM defined in Concierge.
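As a rough illustration of what a call to this endpoint looks like, the sketch below assembles the URL and `fetch()` options without sending anything. `buildProxyRequest`, its parameter names, and the `mi.example.com` hostname are placeholders chosen for this example, not part of the product.

```javascript
// Sketch: assemble the URL and fetch() options for the proxy endpoint.
// buildProxyRequest and its parameters are hypothetical, for illustration only.
function buildProxyRequest(hostname, token, model, prompt) {
  return {
    url: `https://${hostname}/ai/oai_api/v1/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Accept": "application/json",
        "Content-Type": "application/json",
        "Authorization": `Bearer ${token}`, // Metric Insights API token
      },
      body: JSON.stringify({
        model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

const request = buildProxyRequest("mi.example.com", "<token>", "<model>", "Hello");
console.log(request.url);
// When ready to send: fetch(request.url, request.init)
```

Keeping request construction separate from sending makes the payload easy to inspect before a token is ever used.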

Prerequisites:

- Install and configure Concierge

- Generate a Metric Insights API token

  - NOTE: Only Metric Insights API tokens generated via /api/get_token are supported.

- Enable System Variable ALLOW_LLM_ACCESS_VIA_API

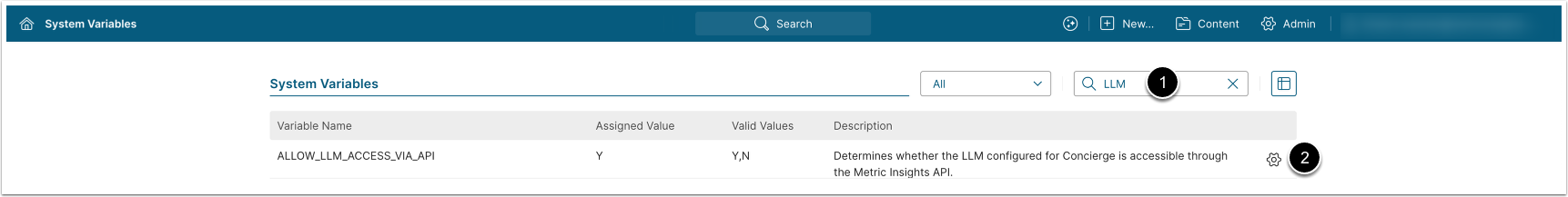

Enable System Variable ALLOW_LLM_ACCESS_VIA_API

Access Admin > System > System Variables

- Enter "LLM" in the search box

- Set ALLOW_LLM_ACCESS_VIA_API to "Y", then click [Commit Changes]

Send Request to LLM

Use the POST /ai/oai_api/v1/chat/completions endpoint to send a user-defined request to the LLM specified in Concierge.

```javascript
$.ajax({
  url: "/ai/oai_api/v1/chat/completions",
  type: "POST",
  headers: {
    "Accept": "application/json",
    "Content-Type": "application/json",
    "Authorization": "Bearer <Paste Metric Insights API Token here>"
  },
  data: JSON.stringify({
    model: "<Enter LLM model>",
    messages: [{ role: "user", content: "<Enter your prompt here>" }]
  })
}).done(console.log).fail(console.error);
```

Example response:

```json
{
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "This is a sample response. Have a great day!"
      },
      "finish_reason": "stop"
    }
  ]
}
```

Retrieve Metric Insights API Token and Submit Prompt

The example below demonstrates how to:

- Retrieve a Metric Insights API token for the authenticated user via GET /api/get_token.

  - NOTE: This API call must be executed by an authorised user.

- Send a request to the LLM using that token:

  - Via the OpenAI client library.

  - Via a direct HTTP request: POST /ai/oai_api/v1/chat/completions.

```html
<script>
  // Assumes the OpenAI JavaScript client library is already loaded on the page
  // and exposes the OpenAI constructor.
  const MI_HOSTNAME = "<MI hostname>"; // Replace with your Metric Insights hostname

  async function demoFlow() {
    try {
      // 1. Get Metric Insights API token.
      // The relative URL resolves only when this page is served by Metric Insights.
      const tokenResp = await fetch("/api/get_token", {
        method: "GET",
        headers: { "Content-Type": "application/json" },
      });
      if (!tokenResp.ok) {
        throw new Error(`Token request failed with HTTP ${tokenResp.status}`);
      }
      const tokenJson = await tokenResp.json();
      const jwt = tokenJson.token;
      console.log("Received token:", jwt); // Demo only; avoid logging tokens in production

      // 2. Send request to LLM via API Proxy

      // --- A: Use OpenAI client library ---
      const openAiClient = new OpenAI({
        apiKey: jwt,
        baseURL: `https://${MI_HOSTNAME}/ai/oai_api/v1/`,
        // Recent versions of the OpenAI JS client refuse to run in a browser without this flag
        dangerouslyAllowBrowser: true,
      });
      const chatResp = await openAiClient.chat.completions.create({
        model: "gpt-5", // Example model name
        messages: [
          { role: "user", content: "Tell me about Metric Insights" }
        ],
      });
      console.log("Assistant reply:", chatResp.choices[0].message.content);

      // --- B: Direct HTTP request ---
      const rawResp = await fetch("/ai/oai_api/v1/chat/completions", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          "Authorization": "Bearer " + jwt
        },
        body: JSON.stringify({
          model: "gpt-4.1", // Example model name
          messages: [
            { role: "user", content: "Tell me about Metric Insights" }
          ]
        })
      });
      if (!rawResp.ok) {
        throw new Error(`Proxy request failed with HTTP ${rawResp.status}`);
      }
      const rawJson = await rawResp.json();
      console.log("Assistant reply (Direct HTTP):", rawJson.choices[0].message.content);
    } catch (err) {
      console.error("Flow failed:", err);
    }
  }

  // Run it once the page is loaded
  document.addEventListener("DOMContentLoaded", demoFlow);
</script>
```
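Both requests above read `choices[0].message.content` directly, which throws if the response has no choices. The sketch below is one possible way to wrap the direct HTTP call so that HTTP failures and empty responses are handled explicitly. `askProxy`, `baseUrl`, and `fetchImpl` are hypothetical names introduced here, and the error body format of the proxy is not documented in this article, so only the status code is reported.

```javascript
// Sketch: send one prompt through the proxy and read the reply defensively.
// askProxy, baseUrl, and fetchImpl are hypothetical names used for illustration.
async function askProxy({ baseUrl, token, model, prompt, fetchImpl = fetch }) {
  const resp = await fetchImpl(`${baseUrl}/ai/oai_api/v1/chat/completions`, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      "Authorization": `Bearer ${token}`,
    },
    body: JSON.stringify({
      model,
      messages: [{ role: "user", content: prompt }],
    }),
  });
  if (!resp.ok) {
    // The error payload is undocumented here, so report only the HTTP status.
    throw new Error(`Proxy request failed with HTTP ${resp.status}`);
  }
  const json = await resp.json();
  // Optional chaining returns null instead of throwing when "choices" is missing or empty.
  return json.choices?.[0]?.message?.content ?? null;
}

// Offline demonstration: a stub stands in for fetch, so no network call is made.
const stubFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => ({
    choices: [
      { index: 0, message: { role: "assistant", content: "Hi!" }, finish_reason: "stop" },
    ],
  }),
});

askProxy({
  baseUrl: "https://mi.example.com",
  token: "<token>",
  model: "<model>",
  prompt: "Hello",
  fetchImpl: stubFetch,
}).then((reply) => console.log("Assistant reply:", reply));
```

Passing the fetch implementation as a parameter is a design choice that lets the helper be exercised without a live Metric Insights instance. In a real page, omit `fetchImpl` so the built-in `fetch` is used.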